Which LLM Produces the Best Code?

Evaluating LLMs for Code Generation: Which Model Delivers the Best Results?

Introduction

Overview

In this post, the Talentpath Research team set out to answer a key question: Which large language model (LLM) produces the best code?

We tested five models:

GPT-4o

GPT-3.5 Turbo

Claude-3.5 Sonnet

Claude-3 Sonnet

GitHub Copilot

To assess their performance, we followed a structured process:

We selected 10 coding challenges for the LLMs to solve.

Each generated script was programmatically tested against known test cases for accuracy.

We graded the LLM-generated code based on its ability to solve the challenges.

Finally, we evaluated the quality and maintainability of the code using coding-specific metrics.
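As a rough illustration of the programmatic testing step, here is a minimal sketch of a pass-rate check. It is not the harness used in the study: the `pass_rate` helper, the test-case format, and the sample challenge are all assumptions made for the example.

```python
from typing import Any, Callable, Sequence

# Hypothetical test-case format: (positional arguments, expected result).
TestCase = tuple[tuple[Any, ...], Any]


def pass_rate(solution: Callable[..., Any], cases: Sequence[TestCase]) -> float:
    """Return the fraction of test cases the solution passes."""
    passed = 0
    for args, expected in cases:
        try:
            if solution(*args) == expected:
                passed += 1
        except Exception:
            # A crash counts as a failed case rather than aborting the run.
            pass
    return passed / len(cases) if cases else 0.0


# Illustrative challenge (not one of the ten from the study): sum a list.
def generated_sum(values: list[int]) -> int:
    return sum(values)


cases: list[TestCase] = [(([1, 2, 3],), 6), (([],), 0), (([-1, 1],), 0)]
print(f"pass rate: {pass_rate(generated_sum, cases):.0%}")
```

Counting an exception as a failed case keeps one broken script from stopping the whole run, which matters when grading many generated solutions in a batch.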

Coding Metrics

We used four primary metrics to evaluate code quality and maintainability:

📊 Halstead Volume: Measures code size and complexity from the counts of operators and operands, both distinct and total (higher is worse).

🔄 Cyclomatic Complexity: Measures complexity based on branching logic (higher is worse).

📏 Lines of Code (LOC): Measures the number of lines in the code (higher is worse).

🔧 Maintainability Index: Evaluates how easy the code is to maintain (higher is better).
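To make these metrics more concrete, the sketch below shows one way they could be computed for Python source. It is an approximation under stated assumptions, not the tooling used in the study: the set of branching nodes is simplified, the Halstead volume is passed in rather than computed, and the maintainability index uses the classic formula 171 - 5.2 ln(V) - 0.23 G - 16.2 ln(LOC), which may differ from the variant a given tool reports.

```python
import ast
import math

# Node types that add a decision point (a simplified, assumed rule set).
_BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler,
                 ast.IfExp, ast.comprehension)


def lines_of_code(source: str) -> int:
    """Count non-blank lines that are not pure comments."""
    return sum(1 for line in source.splitlines()
               if line.strip() and not line.strip().startswith("#"))


def cyclomatic_complexity(source: str) -> int:
    """Approximate cyclomatic complexity: 1 + number of decision points."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, _BRANCH_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # Each extra operand of `and`/`or` adds one more path.
            complexity += len(node.values) - 1
    return complexity


def maintainability_index(halstead_volume: float, complexity: int, loc: int) -> float:
    """Classic maintainability index; higher means easier to maintain."""
    return (171 - 5.2 * math.log(halstead_volume)
            - 0.23 * complexity - 16.2 * math.log(loc))


sample = '''
def classify(n):
    if n < 0:
        return "negative"
    if n == 0 or n == 1:
        return "small"
    return "large"
'''

loc = lines_of_code(sample)
cc = cyclomatic_complexity(sample)
# The Halstead volume of 100.0 is a placeholder value for illustration only.
print(loc, cc, round(maintainability_index(100.0, cc, loc), 1))
```

Because the maintainability index subtracts terms for volume, complexity, and length, a solution that is longer or more branched scores lower even when it passes the same tests.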

Human Baseline

To establish a benchmark, we had a mid-level software engineer solve the same coding challenges within an hour, under the same conditions as the LLMs. This provided a point of comparison for LLM performance.

Findings & Results

Overview

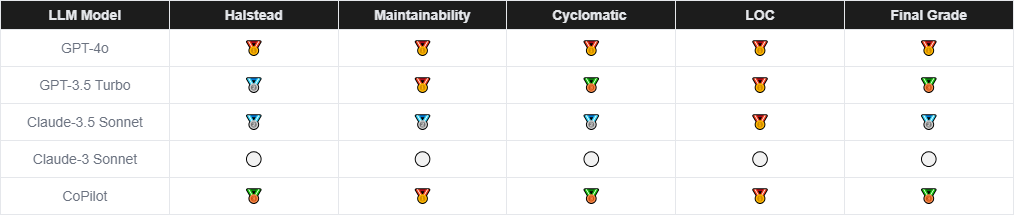

Here’s how the models stacked up against each other across the coding metrics:

Legend: #1 🥇, #2 🥈, #3 🥉, #4 ⚪

Results Discussion

Code Accuracy: Every LLM passed at least 90% of the test cases, which indicates that all of them can generate functional, reliable code for challenges of this kind.

Code Metrics: GPT-4o outperformed all other models across every metric, demonstrating both superior code quality and accuracy. Claude-3.5 Sonnet followed closely but missed some test cases that both OpenAI models handled successfully. GPT-3.5 Turbo showed slightly lower performance compared to GPT-4o, as expected, but even surpassed it on one specific test case. GitHub Copilot performed impressively, improving code metrics while retaining the accuracy of human-generated code. Since Copilot builds on local human inputs, its solutions closely mirrored the human operator's work with slight enhancements.

Unique Findings

Prompt Adherence: GPT-4o didn’t fully follow the prompt instructions: it returned unnecessary markdown along with the code. This was unique to GPT-4o and could be a quirk of its response handling that affects practical usability.
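One common way to cope with stray markdown in a response is to strip it before running the code. The following is a minimal sketch of that idea, assuming the model wraps its code in a fenced block; `extract_code` is a hypothetical helper, not part of our test setup.

```python
import re

# Built at runtime so a literal fence never appears inside this example.
FENCE = "`" * 3

# Matches an opening fence with an optional language tag, the code body,
# and the closing fence.
_FENCED_BLOCK = re.compile(
    FENCE + r"[\w+-]*[ \t]*\n(.*?)\n?" + FENCE, re.DOTALL
)


def extract_code(response: str) -> str:
    """Return the first fenced code block, or the whole response if none."""
    match = _FENCED_BLOCK.search(response)
    if match:
        return match.group(1).strip("\n")
    return response.strip()


wrapped = "Here is the code:\n" + FENCE + "python\nprint('hi')\n" + FENCE + "\nHope it helps."
print(extract_code(wrapped))
```

Falling back to the raw response means the same helper can be applied to every model, including those that return plain code.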

Common Mistake: Every LLM, except GPT-3.5 Turbo, made a similar error on one challenge—they assumed numbering started at 0 when it should’ve started at 1. This highlights the importance of prompt design and thorough code reviews.
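The snippet below illustrates this class of error with a made-up task (it is not the actual challenge from the study): numbering the items of a list. Both function names are hypothetical.

```python
def number_items_zero_based(items: list[str]) -> list[str]:
    # Mirrors the reported mistake: numbering silently starts at 0.
    return [f"{i}. {item}" for i, item in enumerate(items)]


def number_items(items: list[str]) -> list[str]:
    # Intended behavior: numbering starts at 1, stated explicitly.
    return [f"{i}. {item}" for i, item in enumerate(items, start=1)]


steps = ["plan", "code", "review"]
print(number_items_zero_based(steps))
print(number_items(steps))
```

A single test case that pins down the first label is enough to catch the mistake, and stating the starting index in the prompt removes the ambiguity at the source.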

Model Evolution: Newer models from OpenAI (GPT-4o) and Anthropic (Claude-3.5 Sonnet) outperformed their older counterparts. The performance gap between the Sonnet models was especially significant, with Claude-3 Sonnet trailing far behind.

Copilot's Strength: GitHub Copilot, heavily influenced by the human-generated code, performed well and was able to reach the same code coverage as the human operator. This suggests that Copilot, when paired with a skilled engineer, is a strong tool for improving efficiency and code quality.

Complexity of Solutions: For a particularly challenging question, the human coder provided a simple solution, while all the LLMs generated much more complex solutions that were roughly four times more intricate. This suggests that LLMs might over-rely on complex, learned patterns from training data, even when simpler solutions would suffice.

Key Takeaways

1. Improve Your Team's Coding with GitHub Copilot

GitHub Copilot is a game-changer for enhancing human performance. It improves code quality across all metrics, offering the best of both worlds: reduced code complexity and increased maintainability, while retaining the simplicity and accuracy of human-created code.

TL;DR: Copilot mitigates many risks associated with LLMs while providing significant coding benefits. Empowering your team with a Copilot license is a clear win for productivity and code quality.

2. Using LLMs to Create Code

While LLMs are good at generating functional code, they still require guardrails and code review processes to ensure quality. Simple errors, like the incorrect numbering assumption, show the need for more careful prompt design.

A recommended approach:

Step 1: Break tasks into sub-steps. Start by generating a clear list of requirements.

Step 2: Then use that list to generate the code. This avoids potential pitfalls of one-shot generation* and increases code quality by focusing on key details.

*An earlier research post of ours showed that LLMs struggle with too much context.
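The two steps above can be sketched as a small pipeline. This is an illustration only: the prompt wording is our own invention for the example, and `complete` is a hypothetical callable standing in for whichever model API you use.

```python
from typing import Callable


def build_requirements_prompt(task: str) -> str:
    """Step 1 prompt: ask only for requirements, not code."""
    return (
        "List the requirements for the following task as a numbered list. "
        f"Do not write any code.\n\nTask: {task}"
    )


def build_code_prompt(task: str, requirements: str) -> str:
    """Step 2 prompt: ask for code that satisfies the listed requirements."""
    return (
        "Write Python code for the task below. Satisfy every requirement "
        f"and return only code.\n\nTask: {task}\n\nRequirements:\n{requirements}"
    )


def generate_in_two_steps(task: str, complete: Callable[[str], str]) -> str:
    """Run the requirements step, then feed its output into the code step."""
    requirements = complete(build_requirements_prompt(task))
    return complete(build_code_prompt(task, requirements))


def fake_complete(prompt: str) -> str:
    # Stand-in for a real model call so the example runs offline.
    if prompt.startswith("List the requirements"):
        return "1. Accept a list of integers\n2. Return their sum"
    return "def solve(values):\n    return sum(values)"


print(generate_in_two_steps("Sum a list of integers", fake_complete))
```

Passing the model call in as a parameter keeps the pipeline independent of any one provider and makes it easy to test with a stub like `fake_complete`.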

3. Choosing the Right Model: GPT-4o vs. GPT-3.5 Turbo

GPT-4o is the clear winner in terms of overall performance. It consistently performed at the top of all tests and coding metrics.

Cost Efficiency: GPT-4o is slightly cheaper than Claude-3.5 Sonnet, making it a strong choice for teams looking for cost-effective, high-quality code generation.

Affordability Consideration: While GPT-3.5 Turbo didn’t perform as well as GPT-4o, it offers excellent value for money. Given its lower cost, it’s a strong contender for teams operating under tighter budgets.

Cost comparison: GPT-4o costs $2 per million input tokens (Mtok) and $10 per million output tokens, while Anthropic’s Claude-3.5 Sonnet costs $3 and $15 per Mtok respectively, which makes Claude-3.5 Sonnet 50% more expensive at these rates.
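To see what these rates mean for a concrete job, the short calculation below applies the prices quoted above to an assumed workload of 5 million input tokens and 1 million output tokens. The workload is invented for the example, and the `job_cost` helper is hypothetical.

```python
# (input, output) prices in USD per million tokens, as quoted in this post.
PRICES_USD_PER_MTOK = {
    "GPT-4o": (2.00, 10.00),
    "Claude-3.5 Sonnet": (3.00, 15.00),
}


def job_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the cost in USD of one job at the quoted per-Mtok prices."""
    input_price, output_price = PRICES_USD_PER_MTOK[model]
    return input_tokens / 1e6 * input_price + output_tokens / 1e6 * output_price


# Assumed workload, for illustration only.
gpt_cost = job_cost("GPT-4o", 5_000_000, 1_000_000)
claude_cost = job_cost("Claude-3.5 Sonnet", 5_000_000, 1_000_000)
print(gpt_cost, claude_cost, claude_cost / gpt_cost)
```

Because both the input and output prices differ by the same factor, the ratio between the two models stays at 1.5 whatever the mix of input and output tokens.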

Conclusion

While all the tested LLMs are capable of producing functional code, GPT-4o emerges as the top performer across both accuracy and code quality metrics. GitHub Copilot shows strong potential as a tool to augment human coding efforts, blending the advantages of machine-generated code with human oversight. For cost-conscious teams, GPT-3.5 Turbo provides a solid balance between price and performance, making it a viable alternative for code generation.

Our research team is always looking to tackle new research! If you have any direct feedback or requests for further research, please reach out to jean@talentpath.co or visit our website for more information about our team.

Authors

Jean Turban & Bryan Navarro